A ✨Deep Review✨ on Georgia Tech’s Deep Learning course.

How deep is your love for deep learning?

How deep is your model, can it go any deeper?

Now that you have completed the 7641 Machine Learning class (assuming you’re not naughty and have not skipped it), you can focus solely on neural network architectures and leverage their representational power to tackle much more complex problems.

Important note

- I took this class in Fall 2024; the requirements of specific assignments may have changed in later semesters.

- If you have any doubts about whether the suggestions below apply to your cohort, check with the TAs; I claim no responsibility for lost marks or points.

- Join the permanent Deep Learning Discord to ask any questions within the boundaries of the Student CoC / Honor Code.

Before we get into the nitty-gritty of how to navigate the course, you need to get rid of a couple of internal biases that can limit how much you learn from it.

Let’s clear the air

These are some of the most common questions I remember being asked about this course. Send more my way and I can edit them in.

- Do I need CS7641 Machine Learning? The Reddit talk is scaring me aaaaahaha?! TAs are evil there!: While I do not agree with the latter statements, and Reddit is always an echo chamber, the CS7641 prerequisite is listed in the syllabus and is necessary in my opinion. It is skippable iff you have knowledge of the following topics: the bias-variance tradeoff (overfitting vs. underfitting), neural networks, backpropagation, and why we need ML in the first place (read Mitchell chapters 1 and 2). The first assignment itself assumes this knowledge, and its theory questions ask about overfitting/underfitting.

- TL;DR: While the Machine Learning class covers a lot of necessary breadth imo, it can be skipped if you have taken a prior ML course that covers neural networks, e.g., Andrew Ng’s course.

- You can see what the syllabus recommends here.

- Calculus is no joke: Before taking this course, and after looking at the prerequisites, I binge-watched the following playlists over 10 days, oh the pain…

- 3b1b: essence of linalg

- 3b1b: essence of calc

- Machine Learning for Engineering & Science: This playlist covers a lot of math initially.

- The most important part is understanding linear algebra and how to take derivatives, then carrying that forward to matrices: The Matrix Calculus You Need For Deep Learning. This is the essence of backpropagation.

- Math for ML textbook

- You will miss this... Maybe not: Studying all this math helped when doing things from scratch early on (on small/medium), but less so once the course switched to PyTorch, since it abstracts all this calculus goodness away from you. I now hold a lot of respect for the people who wrote autodiff libraries; I salute you.

- Now that the class has ended, I’m revisiting probability (for GenAI ofc, and to better understand VAEs and the like). This probability course has really cleared up a lot of foundations for me: An Intuitive Introduction to Probability.

- Other resources I did not get a chance to look into:

Can You Derive?

This was me after I had binged all these videos and practiced a lot of problems, which led to a 25/26 on Quiz 1. Yes, I’m shamelessly bragging.

- GPU use: Most of the assignments (and the final project) use PyTorch, which in turn lets you train your models on GPUs ✨faster✨. I used Colab throughout the semester and even paid for a couple of compute units; you could also look into Lightning AI, which has a better interface and lets you SSH into the VMs they provide using VS Code/PyCharm/whatever. GPUs are not strictly necessary, but they will definitely save you a lot of time.

- Do not miss the OHs: There are 4 different types of office hours (OHs) held throughout the semester:

- Assignment Overviews: DO NOT MISS THESE. I could not watch them live, since the OHs were always at odd hours for me, so I watched the recordings. They really help clarify the assignment requirements, and they include some helpful tips that save a lot of time.

- TA-led OHs: No set structure; just random talk where you can ask questions. I attended a few; they were helpful, but you could skip them.

- Instructor-led OHs: I never watched any; for some reason, the schedule always made it tough for me to join these.

- META/Facebook OHs: I joined a couple. In these, industry experts host a Zoom call where you can discuss and ask questions. There was a set presentation or topic, but the discussion was mostly a random mix of topics.

- Start early: This advice is not new, but it cannot be stressed enough. Each assignment comes with its own unexpected challenges, and it takes time for your mind to absorb and understand new information while tackling the assignment at the same time.
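To make the matrix-calculus advice above concrete, here is a small NumPy sketch (my own illustration, not course material). For a linear map y = Wx with upstream gradient g = ∂L/∂y, the matrix-calculus rules give ∂L/∂W = g xᵀ and ∂L/∂x = Wᵀ g; the toy loss L = ½‖y‖² is an arbitrary choice so that g = y. The code checks both formulas against central finite differences, which is also a handy habit when debugging the from-scratch assignments.

```python
import numpy as np

def loss(W, x):
    """Toy scalar loss L = 0.5 * ||W x||^2 (chosen only for illustration)."""
    y = W @ x
    return 0.5 * np.sum(y ** 2)

def analytic_grads(W, x):
    """Matrix-calculus gradients for y = W x with L = 0.5 * ||y||^2."""
    y = W @ x
    g = y                     # dL/dy for this particular loss
    dW = np.outer(g, x)       # dL/dW = g x^T
    dx = W.T @ g              # dL/dx = W^T g
    return dW, dx

def numeric_grad(f, A, eps=1e-6):
    """Central finite differences, perturbing one entry of A (in place) at a time."""
    grad = np.zeros_like(A)
    for idx in np.ndindex(A.shape):
        old = A[idx]
        A[idx] = old + eps
        plus = f()
        A[idx] = old - eps
        minus = f()
        A[idx] = old          # restore the original value
        grad[idx] = (plus - minus) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 4))
x = rng.standard_normal(4)

dW, dx = analytic_grads(W, x)
dW_num = numeric_grad(lambda: loss(W, x), W)
dx_num = numeric_grad(lambda: loss(W, x), x)
print(np.allclose(dW, dW_num, atol=1e-5), np.allclose(dx, dx_num, atol=1e-5))  # prints: True True
```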

The Lectures - Not the Best

Georgia Tech Lectures

The Georgia Tech lectures by Dr Kira cover the topics well; if you haven’t enrolled yet, you can view them via the Mediaspace link here. However, they are tough to follow and can make you sleepy, as it feels like someone is reading from a script.

It would be best to use Dr Justin Johnson’s Deep Learning for Computer Vision lectures, which feel much livelier and are easier to digest thanks to their conversational style. I often watched the corresponding videos in that playlist first and then used that context to understand what the Georgia Tech DL lecture was getting at.

Meta Lectures

I really didn’t find most of them helpful. They were very abstract and lacked depth. Resources on YouTube taught the same topics much better.

The Quizzes - A Grade Deflation Tool

Before I started this course, I heard a lot about how the Quizzes are “mini” exams, as some people call them.

Warning

Here’s what the quizzes will feel like even if you study hard. Enjoy the new & updated Chacarron from the ML review.

My experience with the Quizzes was similar. While each one is a small percentage of the grade, together they cover many topics, and they feel like a way to force you to review the course material aggressively instead of just completing the assignments and calling it a day.

I prepared a lot for some of the Quizzes, and I think this is where most of the learning happened for me personally. So this cliffhanger technique works, to say the least.

If I were taking DL again, I would use the assignments’ theory questions and readings to prepare for the Quizzes.

Helpful Resources

Apart from the ones listed in my Deep Learning Prep page, these are some playlists & books that I found helpful and can be perused to develop a deeper understanding.

Video Playlists

- Deep Learning for Computer Vision: A (mostly) 1:1 replacement for the GT lectures. Make this your main substitute for them.

- 100 Days of Deep Learning - CampusX - in Hinglish: This playlist helped in understanding some complex topics but at times there is too much spoonfeeding which I found annoying, haha.

- CS224N NLP Stanford: For NLP topics, don’t go too deep. Just skim the topics being taught, since DL doesn’t focus heavily on NLP.

- MIT OCW Matrix Calculus for ML and Beyond: I binged the first 4-5 videos as preparatory work and found some of the discussion and tasks helpful. I will definitely come back to watch more in the future.

- Yann LeCun’s Deep Learning course: Some of the videos were very good, especially the one on attention.

Books

I referred to these books on and off throughout the semester for specific topics.

- Deep Learning - Ian Goodfellow: The book is technical and really well written. Nothing short of what you would expect from the father of GANs!

- Deep Learning - Chris Bishop: This book covers more recent topics. You may remember Dr Bishop from his famous book, PRML.

- Understanding Deep Learning - Simon J.D. Prince: This book has great visuals. Really helps in building that latent space (intuition) inside your head.

Assignment Requirements

Unlike ML, where the assignments are really fun adventures, the DL assignments are very straightforward.

Most of the assignments follow the same pattern:

- Finish some theory questions - mix of computational and theory

- Choose a research paper, write a review, give your thoughts

- Write code and pass tests on Gradescope

- Record code experiments and explain them in the report

The grading is very straightforward. You only lose points if you fail to answer the question properly.

Assignment 1 - Neural Nets

- Library to use: NumPy, Matplotlib

- Resources | Do Not Miss:

- Assumed Priors: If you’re coming from the ML class, you will be fine; the first assignment covers the basics: activation functions, loss functions, cross-entropy, accuracy, regularization, etc. It goes without saying that the mathematics (especially linear algebra and differentiation) should be clear to you; if not, refer to Gilbert Strang for linear algebra and 3b1b for differentiation. If you are not coming from the ML class, you may be able to complete the assignment, but I do not think you will grasp the concepts well given the time constraints.

- Code Recommendations:

- np.einsum: This NumPy function would really make your matmuls easier. You don’t have to waste time debugging to match the matrix dimensions. It can handle almost any tensor operation (dot product, element-wise multiplication, matrix transpose, etc.).

- Einsum Is All You Need: Despite the title borrowed from the Attention paper, this is a good explanation of how einsum works.

- np.tensordot: Similar to einsum and often faster, since it hands the contraction to a BLAS-backed matrix multiply, but it is limited to dot products and contractions along axes.
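To illustrate the einsum and tensordot recommendations, here is a short sketch with arbitrary shapes that writes a few common operations as einsum subscripts and cross-checks them against tensordot and plain NumPy. The batched outer product at the end is the kind of pattern that shows up in weight gradients.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 3))   # batch of 5 inputs with 3 features
W = rng.standard_normal((3, 4))   # weight matrix mapping 3 -> 4
a = rng.standard_normal(3)
b = rng.standard_normal(3)

# Matrix multiply: sum over the shared index j.
out_einsum = np.einsum("ij,jk->ik", X, W)
out_tensordot = np.tensordot(X, W, axes=([1], [0]))
assert np.allclose(out_einsum, X @ W)
assert np.allclose(out_tensordot, X @ W)

# Dot product, element-wise product and transpose.
dot = np.einsum("i,i->", a, b)          # same as a @ b
hadamard = np.einsum("i,i->i", a, b)    # same as a * b
W_T = np.einsum("ij->ji", W)            # same as W.T

# Batched outer products: (N, 3) and (N, 4) -> (N, 3, 4).
G = rng.standard_normal((5, 4))
per_sample = np.einsum("ni,nk->nik", X, G)
# Summing over the batch gives X^T G, the usual dW of a linear layer.
assert np.allclose(per_sample.sum(axis=0), X.T @ G)
print(out_einsum.shape, per_sample.shape)  # prints: (5, 4) (5, 3, 4)
```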

This assignment is a nice primer on how backpropagation works from a code perspective. You will implement a simple network and a two-layer fully-connected neural net from scratch using NumPy.
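A minimal sketch of what that forward/backward pass can look like for a two-layer net (affine, ReLU, affine, softmax cross-entropy). The function name, the `params` dictionary layout, and the L2 term are my own assumptions for illustration, not the assignment’s required interface; the final lines check one analytic gradient against a finite difference.

```python
import numpy as np

def two_layer_forward_backward(X, y, params, reg=0.0):
    """Loss and gradients for affine -> ReLU -> affine -> softmax cross-entropy.

    X: (N, D) inputs, y: (N,) integer labels.
    params (hypothetical layout): W1 (D, H), b1 (H,), W2 (H, C), b2 (C,).
    """
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    N = X.shape[0]

    # Forward pass
    z1 = X @ W1 + b1
    h1 = np.maximum(0, z1)                                # ReLU
    scores = h1 @ W2 + b2
    shifted = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(N), y]).mean()
    loss += 0.5 * reg * (np.sum(W1 ** 2) + np.sum(W2 ** 2))

    # Backward pass: softmax + cross-entropy gives (probs - one_hot) / N
    dscores = probs.copy()
    dscores[np.arange(N), y] -= 1
    dscores /= N
    grads = {"W2": h1.T @ dscores + reg * W2, "b2": dscores.sum(axis=0)}
    dh1 = dscores @ W2.T
    dz1 = dh1 * (z1 > 0)              # ReLU passes gradient only where z1 > 0
    grads["W1"] = X.T @ dz1 + reg * W1
    grads["b1"] = dz1.sum(axis=0)
    return loss, grads

rng = np.random.default_rng(0)
D, H, C, N = 4, 5, 3, 6
params = {
    "W1": 0.1 * rng.standard_normal((D, H)), "b1": np.zeros(H),
    "W2": 0.1 * rng.standard_normal((H, C)), "b2": np.zeros(C),
}
X = rng.standard_normal((N, D))
y = rng.integers(0, C, size=N)
loss, grads = two_layer_forward_backward(X, y, params, reg=0.1)

# Finite-difference check on a single weight of W2
eps = 1e-6
params["W2"][0, 0] += eps
lp, _ = two_layer_forward_backward(X, y, params, reg=0.1)
params["W2"][0, 0] -= 2 * eps
lm, _ = two_layer_forward_backward(X, y, params, reg=0.1)
params["W2"][0, 0] += eps             # restore
print(abs((lp - lm) / (2 * eps) - grads["W2"][0, 0]) < 1e-6)
```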

This assignment also explains how automatic differentiation (autodiff) works. It was really fascinating and inspires me to someday build a small autodiff library of my own as a fun project, e.g., MyGrad - autodiff for NumPy.
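To give a flavour of how reverse-mode autodiff works, here is a tiny scalar sketch: each `Value` remembers its parents and a local backward rule, and `backward()` applies the chain rule in reverse topological order. The `Value` class is hypothetical and is not MyGrad’s (or PyTorch’s) actual API; real libraries work on whole arrays and handle far more cases.

```python
import math

class Value:
    """A scalar node in a computation graph (illustrative, not a real library API)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None   # local chain-rule step, set by each op

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def _backward():
            self.grad += out.grad
            other.grad += out.grad
        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))
        def _backward():
            self.grad += (1 - t ** 2) * out.grad
        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule in reverse.
        order, seen = [], set()
        def visit(node):
            if node not in seen:
                seen.add(node)
                for p in node._parents:
                    visit(p)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()

# y = tanh(w * x + b)
x, w, b = Value(0.5), Value(-2.0), Value(1.0)
y = (w * x + b).tanh()
y.backward()
print(y.data, w.grad, x.grad, b.grad)  # prints: 0.0 0.5 -2.0 1.0
```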

Assignment 2 - Convolutional Neural Nets (CNNs)

- Library to use: NumPy, Matplotlib, PyTorch (for part 2)

- Resources | Do Not Miss:

- Code Recommendations:

- np.einsum: This NumPy function would really make your matmuls easier. You don’t have to waste time debugging to match the matrix dimensions. It can handle almost any tensor operation (dot product, element-wise multiplication, matrix transpose, etc.).

- Einsum Is All You Need: Despite the title borrowed from the Attention paper, this is a good explanation of how einsum works.

- np.tensordot: Similar to einsum and often faster, since it hands the contraction to a BLAS-backed matrix multiply, but it is limited to dot products and contractions along axes.

- numpy.lib.stride_tricks.as_strided (OPTIONAL): This can help vectorize your operations as you can make a view of your image matrix instead of using for loops for everything.

- numpy.unravel_index (OPTIONAL): Again, it can help if you want to vectorize.

- Pytorch: how and when to use Module, Sequential, ModuleList and ModuleDict: Really helpful to read and appreciate, since it will save you time when you are building really deep networks.
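A sketch of how the optional vectorization tips can fit together for a stride-1, unpadded convolution forward pass. It swaps in `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20+), a safer wrapper around the same strided-view idea as `as_strided`, and uses `np.einsum` for the contraction; `np.unravel_index` turns a flat `argmax` into (row, col), as you might need in a max-pool backward pass. Function names and shapes are illustrative assumptions, not the assignment’s skeleton.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_forward(x, w, b):
    """Valid (no padding), stride-1 convolution (cross-correlation, as in DL libraries).

    x: (N, C, H, W) images, w: (F, C, KH, KW) filters, b: (F,) biases.
    Returns (N, F, H - KH + 1, W - KW + 1).
    """
    KH, KW = w.shape[2:]
    # View of every KH x KW patch: (N, C, OH, OW, KH, KW); no data is copied.
    patches = sliding_window_view(x, (KH, KW), axis=(2, 3))
    # Contract over channels and kernel positions in a single einsum.
    return np.einsum("ncijkl,fckl->nfij", patches, w) + b[None, :, None, None]

def maxpool_argmax(window):
    """(row, col) of the max inside one pooling window.

    In a max-pool backward pass, only this position receives the upstream gradient.
    """
    return np.unravel_index(np.argmax(window), window.shape)

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 5, 5))
w = rng.standard_normal((4, 3, 3, 3))
b = rng.standard_normal(4)
out = conv2d_forward(x, w, b)

# Compare one output entry against an explicit computation on the patch.
n, f, i, j = 1, 2, 0, 1
expected = np.sum(x[n, :, i:i + 3, j:j + 3] * w[f]) + b[f]
print(out.shape, np.isclose(out[n, f, i, j], expected))
print(maxpool_argmax(np.array([[1, 9], [4, 2]])))
```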

This assignment covers convolutional neural networks (CNN).

This assignment covers the following tasks in brief:

- Implement CNNs from scratch using NumPy

- Implement the same CNN using PyTorch

- Use PyTorch to build out your own CNN implementation and compete with other students on a leaderboard.

- Experiment with different loss functions (Class-Balanced (CB) & Focal Loss) and report your findings.
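For the loss-function experiments, here is a hedged PyTorch sketch of the two ideas: focal loss, which scales cross-entropy by (1 - p_t)^γ to down-weight easy examples, and class-balanced weights based on the "effective number of samples" (1 - βⁿ)/(1 - β). The function names, default γ and β, the weight normalization, and the per-sample weighting with a plain batch mean are my assumptions; the assignment may specify a different formulation or reduction.

```python
import torch
import torch.nn.functional as F

def class_balanced_weights(samples_per_class, beta=0.999):
    """Per-class weights proportional to 1 / effective_number.

    effective_number = (1 - beta^n) / (1 - beta). Weights are rescaled to sum to
    the number of classes (a common normalization, assumed here).
    """
    n = torch.as_tensor(samples_per_class, dtype=torch.float32)
    effective_num = (1.0 - torch.pow(beta, n)) / (1.0 - beta)
    weights = 1.0 / effective_num
    return weights * len(weights) / weights.sum()

def focal_loss(logits, targets, gamma=2.0, weight=None):
    """Multi-class focal loss: batch mean of -w_t * (1 - p_t)^gamma * log(p_t)."""
    log_probs = F.log_softmax(logits, dim=1)
    log_pt = log_probs.gather(1, targets.unsqueeze(1)).squeeze(1)  # log p of true class
    pt = log_pt.exp()
    loss = -((1.0 - pt) ** gamma) * log_pt
    if weight is not None:
        loss = loss * weight[targets]   # per-sample class weight, e.g. class-balanced
    return loss.mean()

torch.manual_seed(0)
logits = torch.randn(8, 3)
targets = torch.tensor([0, 0, 0, 0, 0, 1, 1, 2])
w = class_balanced_weights([5000, 500, 50])

# With gamma = 0 and no weights, focal loss reduces to plain cross-entropy.
print(torch.allclose(focal_loss(logits, targets, gamma=0.0), F.cross_entropy(logits, targets)))
print(w)  # rarer classes get larger weights
print(focal_loss(logits, targets, gamma=2.0, weight=w))
```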

PyTorch

For PyTorch, there is a tutorial in the lectures. I did not find it until the end of the course as it is under ‘Facebook Resources’. It basically covers the content of this repo: CS7643 Deep Learing | Fall 2020 at Georgia Tech (Prof. Zsolt Kira).

Once you start using PyTorch, you will appreciate how much it does for you: building the DAG for autodiff, providing every activation function you need, etc.
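As a small illustration of PyTorch doing the calculus for you, the sketch below builds an arbitrary network from `nn.Sequential` and `nn.ModuleList`, lets autograd compute the gradient of the final layer’s weights, and compares it with the manual softmax cross-entropy formula from the NumPy assignments. It also shows the common `torch.cuda.is_available()` idiom for picking up a Colab/Lightning GPU. `SmallNet` and its sizes are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

class SmallNet(nn.Module):
    """Arbitrary example: a Sequential stem plus a ModuleList of hidden blocks."""

    def __init__(self, in_dim=4, hidden=8, n_blocks=2, n_classes=3):
        super().__init__()
        self.stem = nn.Sequential(nn.Linear(in_dim, hidden), nn.ReLU())
        # ModuleList registers each block's parameters, unlike a plain Python list.
        self.blocks = nn.ModuleList(
            [nn.Sequential(nn.Linear(hidden, hidden), nn.ReLU()) for _ in range(n_blocks)]
        )
        self.head = nn.Linear(hidden, n_classes)

    def forward(self, x):
        x = self.stem(x)
        for block in self.blocks:
            x = block(x)
        return x, self.head(x)   # also return features to check the gradient by hand

torch.manual_seed(0)
model = SmallNet().to(device)
x = torch.randn(6, 4, device=device)
y = torch.randint(0, 3, (6,), device=device)

features, logits = model(x)
loss = F.cross_entropy(logits, y)
loss.backward()   # autograd walks the recorded graph backwards

# Manual gradient for the head: dL/dW = (softmax - one_hot)^T h / N
with torch.no_grad():
    dscores = F.softmax(logits, dim=1) - F.one_hot(y, num_classes=3).float()
    manual_grad = dscores.T @ features / x.shape[0]

print(torch.allclose(model.head.weight.grad, manual_grad, atol=1e-6))
print(sum(p.numel() for p in model.parameters()))  # prints: 211
```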

My script for experiment logging

I built an MLflow wrapper around the PyTorch Solver.py for this assignment. It helps you track and store your experiments, either locally or on Databricks.

Fun fact: my Databricks account got suspended because I was logging the whole Solver object, which was around 1.2 GB, haha. I definitely recommend running it locally (or on Colab/Lightning) instead.

Info

This really helped me save time, and I could reference it directly in my report. You can see how it looks in the screenshot, with the hyperparams hidden (not gonna help you ofc :)).

You can view the code here. Please ⭐ if it helps you.

Interesting paper

In ML, we learned all about the inherent biases of our algorithms, especially in A2, where we saw how different optimization algorithms traverse problem landscapes very differently.

Similarly, in this assignment, one of the paper choices, which I picked to review, was quite interesting. The authors explored how, just as we humans have biases in how we look at images, CNNs exhibit a bias too. And it does not end there: they further discuss training the model to change its bias??! Qualitative analysis has always been very fascinating 😄

Qualitative analysis breaking minds every time.

Get ready to have your mind blown.

Assignment 3 - Interpreting CNNs (Removed from Spring 2025 onwards)

Info

This is an old assignment that was removed starting in Spring 2025.

- Library to use: PyTorch, Captum, Matplotlib

- Resources | Do Not Miss:

In this assignment, your adventures in implementing things from scratch end. Good news: no more shape matching, building computation graphs, or computing gradients, YAY. :)

This assignment dives into what people call “XAI” (explainable AI), i.e., why does a CNN (or the model in question) output X when presented with a given image?

It was the easiest and least time-consuming assignment, but fun nonetheless. The style transfer and class-model visualization gave a nice taste of what GenAI looks like.

This assignment covers the following tasks in brief:

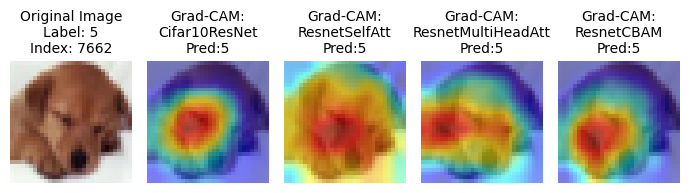

- Visualizing what CNNs learn: Saliency Maps, GradCAM, Guided Backpropagation

- Fooling images: how can CNNs be fooled with small perturbations in our input

- Generate images using class model viz (gradient ascent): generate images by maximizing activation of specific output classes

- Style transfer: preserve the structural content of an input image but mix in the style patterns of another image.
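A minimal sketch of the simplest of these, a vanilla gradient saliency map: backpropagate the correct-class score to the input pixels and take the maximum absolute gradient over color channels. The tiny untrained CNN is a stand-in assumption so the code runs offline; in practice you would use a pretrained model, and Captum ships ready-made attribution methods for this kind of analysis.

```python
import torch
import torch.nn as nn

def saliency_map(model, images, labels):
    """Vanilla gradient saliency: |d score_y / d pixel|, max over channels.

    images: (N, 3, H, W), labels: (N,). Returns (N, H, W).
    """
    model.eval()
    images = images.clone().requires_grad_(True)
    scores = model(images)                                      # (N, num_classes)
    correct = scores.gather(1, labels.view(-1, 1)).squeeze(1)  # score of the true class
    correct.sum().backward()   # each image's score depends only on its own pixels
    return images.grad.abs().amax(dim=1)

# Stand-in model (untrained, for illustration only).
torch.manual_seed(0)
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 10),
)
images = torch.randn(2, 3, 32, 32)
labels = torch.tensor([3, 7])
sal = saliency_map(model, images, labels)
print(sal.shape)   # one heat map per image
```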

Style transfer

A stylized style-transfer image of Burj Khalifa.
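In the classic neural style transfer formulation, *content* is compared through raw feature maps and *style* through Gram matrices of feature maps. A hedged sketch of those pieces follows; the normalization by C·H·W and the loss weights are assumptions (conventions vary), and extracting features from a pretrained CNN is left out.

```python
import torch

def gram_matrix(features, normalize=True):
    """Gram matrix of CNN feature maps: channel-by-channel inner products.

    features: (N, C, H, W). Returns (N, C, C). Dividing by C*H*W is one common
    normalization (an assumption here).
    """
    N, C, H, W = features.shape
    flat = features.reshape(N, C, H * W)
    G = flat @ flat.transpose(1, 2)
    return G / (C * H * W) if normalize else G

def content_loss(weight, current, target):
    """Squared distance between feature maps: keeps the structure of the content image."""
    return weight * ((current - target) ** 2).sum()

def style_loss(feats, style_targets, weights):
    """Sum over chosen layers of weighted squared Gram-matrix differences."""
    loss = 0.0
    for f, g_target, w in zip(feats, style_targets, weights):
        loss = loss + w * ((gram_matrix(f) - g_target) ** 2).sum()
    return loss

torch.manual_seed(0)
f = torch.randn(1, 4, 5, 5)
G = gram_matrix(f)
print(G.shape, torch.allclose(G, G.transpose(1, 2)))  # Gram matrices are symmetric

# Shuffling spatial positions leaves the Gram matrix unchanged: it captures which
# features co-occur, not where they are, which is why it works as a style signal.
perm = torch.randperm(25)
f_shuffled = f.reshape(1, 4, 25)[:, :, perm].reshape(1, 4, 5, 5)
print(torch.allclose(gram_matrix(f_shuffled), G, atol=1e-6))
```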

Class maximization using Gradient Ascent

A synthetic Gorilla image generated via gradient ascent on a SqueezeNet model pre-trained on ImageNet which highlights features that the neural network associates with a gorilla (our chosen class).
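Class model visualization boils down to gradient *ascent* on the input image while the network stays frozen: maximize the class score minus an L2 penalty on the image. In the sketch below, a linear classifier stands in for the pretrained SqueezeNet so it runs without downloads; the step size, penalty, image size, and normalized update are my assumptions, and with a real CNN this loop is often combined with extra regularizers such as jitter or blurring.

```python
import torch
import torch.nn as nn

def class_visualization(model, target_class, steps=50, lr=0.5, l2_reg=1e-3, size=32):
    """Gradient ascent on the input image to maximize one class score.

    Objective: score_c(I) - l2_reg * ||I||^2. Only the image is updated; the
    model's weights stay fixed.
    """
    model.eval()
    for p in model.parameters():
        p.requires_grad_(False)
    img = (0.01 * torch.randn(1, 3, size, size)).requires_grad_(True)
    history = []
    for _ in range(steps):
        score = model(img)[0, target_class]
        objective = score - l2_reg * img.pow(2).sum()
        objective.backward()
        with torch.no_grad():
            # Normalized step in the direction that increases the objective.
            img += lr * img.grad / (img.grad.norm() + 1e-12)
            img.grad.zero_()
        history.append(score.item())
    return img.detach(), history

torch.manual_seed(0)
# Stand-in for a pretrained CNN such as SqueezeNet.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
img, history = class_visualization(model, target_class=4)
print(history[0], history[-1])  # the class score climbs during the ascent
```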

Assignment 3 - Sequences (Previously Assignment 4)

Info

This was previously A4. From Spring 2025, this is now A3.

- Library to use: PyTorch, Matplotlib

- Resources | Do Not Miss:

- UMich

- StatQuest

- On Latent Spaces/Latent Vector/Hidden Vector

This assignment covers seq2seq encoder-decoder models. We implement a model that translates German to English.

We start by implementing RNNs, noting their drawbacks, and move to LSTMs; we then augment both RNNs and LSTMs with Attention and compare the results. Next, we look at how Transformer models drop the step-by-step recurrence of RNNs & LSTMs and can process all positions of a sequence in a batched fashion, which allows for better scaling, efficiency, and parallelism.
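To see why RNNs are inherently sequential, this sketch unrolls a single-layer tanh RNN by hand and checks it against `torch.nn.RNN` using the same weights (sizes are arbitrary). Step t needs h_{t-1}, so the time loop cannot be parallelized; self-attention, by contrast, handles all positions of a training sequence with batched matmuls.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, T, D, H = 2, 5, 3, 4                      # batch, time steps, input size, hidden size
rnn = nn.RNN(input_size=D, hidden_size=H, batch_first=True)  # tanh RNN by default
x = torch.randn(N, T, D)

with torch.no_grad():
    # Manual unrolling: h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh).
    # Step t needs h_{t-1}, so this loop over time cannot be parallelized.
    h = torch.zeros(N, H)
    outputs = []
    for t in range(T):
        h = torch.tanh(x[:, t] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
                       + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0)
        outputs.append(h)
    manual = torch.stack(outputs, dim=1)     # (N, T, H)
    reference, h_last = rnn(x)               # built-in RNN on the same weights

print(torch.allclose(manual, reference, atol=1e-6), torch.allclose(h, h_last[0], atol=1e-6))
```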

Initially, the compression of the input to a latent vector did not make much sense to me. It only started to make sense to me when I saw denoising autoencoders. It was very fascinating to see that you could denoise MNIST images by encoding the input vector/image into a compressed latent space and then reconstructing a clean version thanks to optimizing through backprop.

This idea of a latent space carries forward to Attention, where the latent vector of each token (here the input is not an image but text, represented as tokens) is moved (in a linear algebra fashion: basically a linear transformation, which is again just a matmul 😊) to the right position so that tokens can relate to each other contextually. Look at the last video link in the resources above.
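The "it is just matmuls" view can be made concrete with a sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, including the causal mask a Transformer decoder uses. The random projection matrices `W_q`, `W_k`, `W_v` stand in for learned weights; everything here is illustrative rather than the assignment’s code.

```python
import math
import torch

def scaled_dot_product_attention(q, k, v, causal=False):
    """softmax(Q K^T / sqrt(d_k)) V for q, k, v of shape (N, T, d).

    Each output row is a weighted average of the value vectors, weighted by how
    well that token's query matches every key.
    """
    d_k = q.shape[-1]
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)        # (N, T, T)
    if causal:
        T = scores.shape[-1]
        mask = torch.triu(torch.ones(T, T, dtype=torch.bool), diagonal=1)
        scores = scores.masked_fill(mask, float("-inf"))     # no peeking at future tokens
    weights = torch.softmax(scores, dim=-1)
    return weights @ v, weights

torch.manual_seed(0)
N, T, d_model = 1, 4, 8
x = torch.randn(N, T, d_model)                  # token embeddings (latent vectors)
W_q, W_k, W_v = (torch.randn(d_model, d_model) / math.sqrt(d_model) for _ in range(3))
out, attn = scaled_dot_product_attention(x @ W_q, x @ W_k, x @ W_v, causal=True)
print(out.shape)   # all positions computed with batched matmuls
print(attn[0])     # lower-triangular: row t only attends to positions <= t
```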

Assignment 4

Info

This is a new assignment on Generative Modeling added in Spring 2025.

In this assignment, you implement various generative models, namely:

- Variational Autoencoders (VAEs)

- Generative Adversarial Networks (GANs)

- Denoising Diffusion Probabilistic Models (DDPMs)
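Since I did not take this version of the assignment, the following is only a generic sketch of two standard building blocks, not the assignment’s code: the VAE reparameterization trick with its closed-form KL term, and the DDPM closed-form forward (noising) process with the linear β schedule from 1e-4 to 0.02 over 1000 steps used in the original DDPM paper. Function names are my own.

```python
import torch

def reparameterize(mu, logvar):
    """VAE reparameterization trick: z = mu + sigma * eps, eps ~ N(0, I).

    The randomness lives in eps, so gradients flow to mu and logvar.
    """
    std = torch.exp(0.5 * logvar)
    return mu + std * torch.randn_like(std)

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over batch."""
    return (-0.5 * (1 + logvar - mu.pow(2) - logvar.exp()).sum(dim=1)).mean()

def ddpm_q_sample(x0, t, alpha_bar, noise=None):
    """DDPM forward process in closed form: x_t = sqrt(a_bar_t) x0 + sqrt(1 - a_bar_t) eps."""
    if noise is None:
        noise = torch.randn_like(x0)
    a = alpha_bar[t].view(-1, *([1] * (x0.dim() - 1)))  # broadcast over non-batch dims
    return a.sqrt() * x0 + (1 - a).sqrt() * noise

# Linear beta schedule: 1e-4 to 0.02 over T = 1000 steps.
T = 1000
betas = torch.linspace(1e-4, 0.02, T)
alpha_bar = torch.cumprod(1.0 - betas, dim=0)

torch.manual_seed(0)
mu, logvar = torch.zeros(4, 2), torch.zeros(4, 2)
z = reparameterize(mu, logvar)
print(z.shape, kl_to_standard_normal(mu, logvar).item())  # KL is 0 when q equals the prior

x0 = torch.randn(4, 1, 28, 28)         # e.g. a batch of MNIST-sized images
t = torch.tensor([0, 10, 500, 999])
xt = ddpm_q_sample(x0, t, alpha_bar)
print(xt.shape, alpha_bar[-1].item())  # alpha_bar_T is near 0, so x_T is almost pure noise
```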

I don’t have any further information, as I took this class in Fall 2024. However, it’s a bit funny and coincidental that I was writing my own GAN on FashionMNIST 2 weeks before this assignment was released in Spring 2025.

Group Project

Pick any project you like, there’s no end to how deep you can go or how ambitious you’d like to be.

The main idea is that your project should showcase the latent vector you have built up inside your head, i.e., your Deep Learning knowledge.

I have a few recommendations that could be of help:

- Try to make groups with people you know and gel well with. Find people from your past class, e.g. ML

- Try to find people in similar timezones. If you’re in Asia, don’t pick people in the US. It becomes really tough to manage.

- Try not to be too ambitious. Factor in training times when picking a task to solve.

Take this seriously as it accounts for a good chunk of your grade.

My project

For our project, we were a group of 3. Given the time constraints and how busy our schedules were, we deliberately avoided a complex project and chose to work with images, since that felt the most comfortable.

We augmented a not-so-deep ResNet-20 with three different attention mechanisms, evaluated them, and interpreted the results through GradCAM. We were happy to see some satisfying results. The paper: CNNtention: Can CNNs do better with Attention?
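For readers who have not met GradCAM, a minimal sketch: pool the gradients of the class score over each feature map to get per-channel weights, take the ReLU of the weighted sum of maps, and upsample to the image size. The split into a hypothetical `features_net` and `head`, and the tiny untrained layers, are assumptions for illustration; this is not our project’s ResNet-20 code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def grad_cam(features_net, head, image, target_class):
    """Grad-CAM for one image.

    features_net maps (1, 3, H, W) -> conv feature maps A of shape (1, K, h, w);
    head maps A -> class scores. Returns an (H, W) map scaled to [0, 1].
    """
    A = features_net(image)
    A.retain_grad()                                      # keep dScore/dA for this non-leaf
    score = head(A)[0, target_class]
    score.backward()
    alpha = A.grad.mean(dim=(2, 3), keepdim=True)        # (1, K, 1, 1) pooled gradients
    cam = F.relu((alpha * A).sum(dim=1, keepdim=True))   # weighted sum of feature maps
    cam = F.interpolate(cam, size=image.shape[2:], mode="bilinear", align_corners=False)
    cam = cam[0, 0].detach()
    return cam / (cam.max() + 1e-8)

torch.manual_seed(0)
features_net = nn.Sequential(   # hypothetical backbone (untrained)
    nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1, stride=2), nn.ReLU(),
)
head = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(16, 10))
image = torch.randn(1, 3, 32, 32)
cam = grad_cam(features_net, head, image, target_class=2)
print(cam.shape, cam.min().item(), cam.max().item())
```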

Congratulations!

You can now finally endorse this meme and have a Twitter war with people making GPT wrappers! 😠

My scores

| Component | Score |
|---|---|
| Assignments | 444.58 / 451.00 |
| Quizzes | 93.08 / 131.00 |
| Final Project | 55.00 / 62.00 |
| Ed Endorsed Posts | 1.00 / 1.00 |
| Overall | 92.10 % |

Try to do well on all the assignments and the final project; that should make up for any problems you face in the quizzes.

The Ed Endorsed Posts point was given to just 3 people 🤯 in the class, for being “very helpful”, I’m assuming. So, if you’re active on Ed and sitting on the borderline at 88, that 1 point could raise your grade to an A.

I did help out on Ed, but in my honest opinion, it was mostly me being on Ed when I had nothing better to do. I was wasting my time goofing off on Ed/Discord. At least I got rewarded, hahahaha.

Other interesting text to review

- My dear friend Inspector Yxlow always has good stuff to talk about.

- There exists a Google Doc written by the OG Murilo that has some great resources even I did not know about.

Comments

Have some feedback or found any mistakes? Most of you would already know where to find me.

If not, you can e-mail me by clicking here.

Changelog

- [26.12.2024] Init

- [03.01.2025] First draft completed

- [15.03.2025] Added new Spring 2025 assignment.